Let's Learn How Systemd Works


The article discusses the management of systemd services. If you like systemd’s approach, check out Earthly. Earthly streamlines the build automation process for services of all types. Check it out.

Before I decided to write this article, I knew systemd was the init process in Linux and that it was the process under which all other processes ran. I had run my share of systemctl commands, but truthfully, I just never really needed to learn about it beyond that. I never thought much about how systemd knew which processes to run or what else it could do. I just knew that when a process started, somehow, systemd would take over.

There are some excellent resources out there that go into detail about how systemd works, but what helped me start to understand it was setting it up to manage a service myself. In this tutorial, we’ll take a simple golang program and set it up to run in the background with systemd. We’ll ensure it restarts if it gets killed, and we’ll also make sure systemd starts the process on boot. Doing so will allow us to take an in-depth tour of how systemd works and what features it offers.

What Is the Init Process?

In the world of Linux processes, the init process is king. It’s the first process to start after the kernel is loaded, and all other processes run beneath it. The init process starts by initializing any processes needed to bring the machine to a functioning state. After that, it’s responsible for starting and stopping processes while the system is running, as well as terminating processes safely when the system is shut down.

The Linux Boot Process

The boot process from the time you press the power button to the time you are able to use your OS is extremely complicated. This article will largely focus on systemd after Linux is done booting; however, it’s worth familiarizing yourself with what happens before systemd is even invoked. I found this chapter on System Initialization from the Debian website to be a good summary. It remains specific without getting bogged down in too much detail.

In older versions of Linux, the init system (typically SysV init) brought the machine up by running a series of shell scripts. However, most modern Linux distros have replaced that script-driven approach with a binary program called systemd [1]. Systemd is a newer init system designed to improve performance and simplify service management. It uses configuration files known as units to define and manage services, and it provides advanced features like parallel service startup, socket activation, and on-demand service launching. It also manages logs. That’s a lot for one program to do, which is why systemd is more than just a program; it’s actually a suite of tools that work together.

I should note that not all Linux distributions use systemd, but many have made the switch. You should check which init system your specific distribution and version uses to be sure.

Here’s a list of some popular Linux distributions that use systemd:

- Ubuntu 15.04 and later versions

- Debian 8 (Jessie) and later versions

- CentOS 7 and later versions

- RHEL 7 and later versions

- Fedora

- Arch Linux

A Quick Look At Parent and Child Processes

Let’s take a quick tour of Linux processes before we get started setting up our golang app to be managed by systemd.

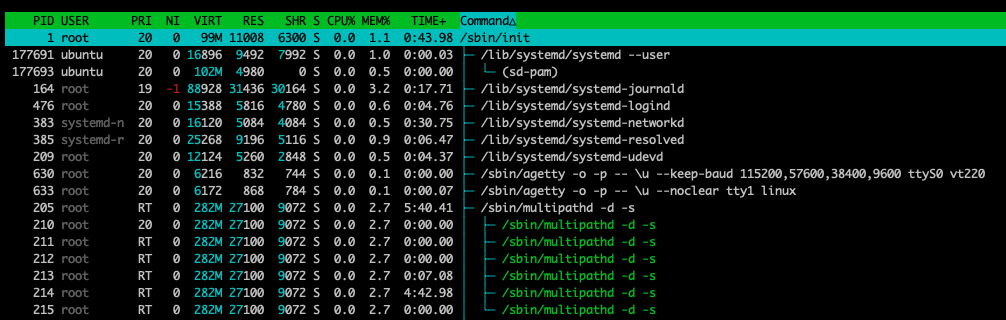

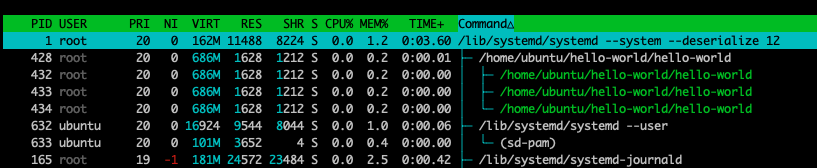

I have an Amazon EC2 instance running Ubuntu 22.04.2 LTS. We can use htop to get a list of all the running processes. Once in htop, pressing t will give us a tree view so that we can see which processes are running under other processes.

Take a look at the top process, the one with process id (PID) 1, /sbin/init. It has PID 1 because it’s the first process that started when we booted this instance of Linux. You’ll also notice that in our tree view, all other processes exist in branches underneath /sbin/init.

But wait, I thought systemd was the init process? Let’s go to /sbin and take a look.

ubuntu@ip-xxxx:/sbin$ ls -lah | grep init

lrwxrwxrwx 1 root root 20 Mar 20 14:32 init -> /lib/systemd/systemd

If we take a closer look at init, we can see that it simply symlinks to /lib/systemd/systemd. So really, systemd is running all of our processes.

Our Process

So that we can look at how to set up a process to be managed by systemd, we’ll use this simple Go program that prints “Hello World” every second.

package main

import (
    "fmt"
    "time"
)

func main() {
    for {
        fmt.Println("Hello World")
        time.Sleep(1 * time.Second)
    }
}

The code and the executable are in my home directory.

ubuntu@ip-xxxx:~/hello-world$ ls

go.mod hello-world main.go

Let’s run it.

ubuntu@ip-xxxx:~/hello-world$ ./hello-world

Hello World

Hello World

Hello World

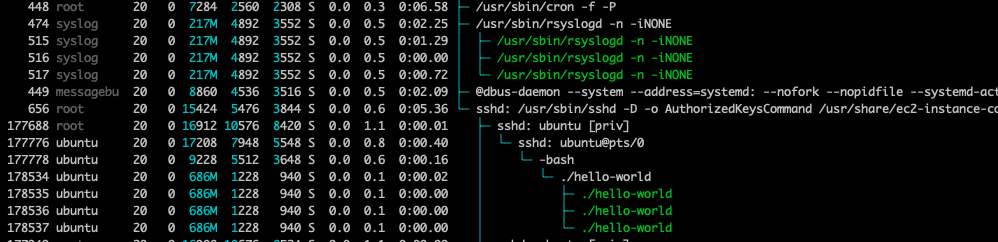

...And while it’s running, in a separate tab, we can open htop.

This image is zoomed in, so we don’t see the init process at the top, but it’s still there, watching over everything. We can follow the tree down to ssh (which is how I’m connecting to the instance). It has its own child processes. Under one of those is our shell, bash, which is the environment where we just ran hello-world. Then finally, under that, we have our hello-world process. (htop lists three hello-world entries for a program this simple. These are most likely threads rather than separate processes: the Go runtime starts a few OS threads of its own, and htop shows threads by default.)
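If you don’t have htop handy, the same parent/child chain can be read straight out of /proc. Here’s a minimal sketch, assuming a Linux machine with /proc mounted; ancestors is a helper name I made up for illustration, not a standard command.

```shell
#!/bin/sh
# ancestors PID: print "PID command" for PID, then for its parent, and so on
# up the tree. Hypothetical helper; relies on Linux's /proc layout.
ancestors() {
    pid="$1"
    while [ -n "$pid" ] && [ "$pid" -ge 1 ] && [ -r "/proc/$pid/status" ]; do
        echo "$pid $(cat "/proc/$pid/comm")"
        # The PPid: field holds the parent's PID (0 once we pass PID 1)
        pid=$(awk '/^PPid:/ {print $2}' "/proc/$pid/status")
    done
}

# Walk up from the current shell
ancestors $$
```

Run from an SSH session on a systemd machine, the last line printed should be PID 1, which lines up with what the htop tree shows.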

What Are Systemd Units?

When we talk about long-running processes in Linux, it’s common to refer to them as services or daemons. Systemd calls them units. A systemd unit can be a service, like our hello world program, but it can also be other things that systemd is capable of managing, such as sockets, mounts, or targets. (More on targets in a bit.)

Units are defined in unit files. A systemd unit file is a configuration file that defines various system components. Each unit file contains information about the unit’s behavior, dependencies, startup conditions, and other settings needed for systemd to manage and supervise the corresponding system resource.

These unit files live in several different places. It’s helpful to become familiar with a few of them.

- Custom System Unit Files - /etc/systemd/system/: Administrators can create custom system unit files to define new services or modify existing ones. Files in this directory take precedence over those in the system directories, allowing you to override or extend the default settings. This is where we will create our own unit file for our hello-world service.

- Standard Unit Files - /lib/systemd/system/: Unit files for system wide units. These are usually related to applications that come preinstalled and are managed by the distro maintainers.

- Installed Package Unit Files - /usr/lib/systemd/system: Generally this is where your package manager will put unit files for installed packages. This will happen automatically as part of the install process. For example, nginx puts several unit files here after it is installed.
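To make the precedence idea concrete, here’s a rough sketch of a "first directory wins" lookup. It is not how systemd is implemented; find_unit is a hypothetical helper, and the directory order in the usage comment simply follows the list above.

```shell
#!/bin/sh
# find_unit UNIT DIR...: print the first DIR/UNIT that exists, checking the
# directories in the order given (highest priority first).
# Hypothetical helper for illustration; not a systemd command.
find_unit() {
    unit="$1"
    shift
    for dir in "$@"; do
        if [ -f "$dir/$unit" ]; then
            printf '%s\n' "$dir/$unit"
            return 0
        fi
    done
    return 1
}

# On a real machine the call might look like this:
#   find_unit nginx.service /etc/systemd/system /lib/systemd/system /usr/lib/systemd/system
```

To ask systemd itself which file it loaded for a unit, systemctl cat followed by the unit name prints the file along with its path.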

How to Create Your Own Systemd Unit

So now we can create our own systemd unit file to manage our hello-world process. Create a file called /etc/systemd/system/hello-world.service.

[Unit]

Description=Hello World Service

[Service]

ExecStart=/home/ubuntu/hello-world/hello-world

[Install]

WantedBy=multi-user.target

Most interesting here is ExecStart, which tells systemd how to start our service. WantedBy tells systemd which unit should pull our service in once the service is enabled. In other words, it declares a dependency in reverse: rather than listing what hello-world needs, it says who wants hello-world. We could name another service here, but it’s more common to name a target. Targets are groups of units, and they are often used to specify certain states you may want your system to be in, similar to runlevels in SysV init. In this case, multi-user.target represents a state where we have a multi-user environment that can be interacted with. Declaring it here tells systemd it should start our hello-world service when it reaches the multi-user target state.
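It helped me to see what WantedBy turns into on disk. When a unit is enabled, systemd creates a symlink to it inside a .wants directory named after the target. The sketch below imitates that in a scratch directory so nothing on the real system is touched; enable_unit is a hypothetical helper, not a systemd command.

```shell
#!/bin/sh
# enable_unit ROOT UNIT TARGET: mimic the symlink that enabling a unit with
# WantedBy=TARGET creates. ROOT stands in for /etc/systemd/system.
# Hypothetical helper for illustration only.
enable_unit() {
    root="$1"
    unit="$2"
    target="$3"
    mkdir -p "$root/$target.wants"
    ln -sf "$root/$unit" "$root/$target.wants/$unit"
}

# Build a throwaway copy of our unit and "enable" it
scratch=$(mktemp -d)
printf '[Service]\nExecStart=/home/ubuntu/hello-world/hello-world\n' > "$scratch/hello-world.service"
enable_unit "$scratch" hello-world.service multi-user.target

# Show the resulting link
ls -l "$scratch/multi-user.target.wants"
```

On a real system the equivalent command is sudo systemctl enable hello-world, and the link should land in /etc/systemd/system/multi-user.target.wants/.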

If you ever need to know what other units are part of a certain target, that’s easy to check. Targets are another form of unit that systemd can manage, and they are configured the same way as our service: in a unit file. We can find the unit file for the multi-user target at /lib/systemd/system/multi-user.target, but that’s not going to show us all the dependencies since they won’t all be declared in one file. To see all the dependencies for the multi-user target (or any unit), we can run:

ubuntu@ip-xxxx:~$ sudo systemctl list-dependencies multi-user.target

multi-user.target

● ├─apport.service

● ├─chrony.service

● ├─console-setup.service

● ├─cron.service

● ├─dbus.service

○ ├─dmesg.service

○ ├─e2scrub_reap.service

○ ├─ec2-instance-connect.service

○ ├─grub-common.service

○ ├─grub-initrd-fallback.service

○ ├─hibinit-agent.service

○ ├─irqbalance.service

○ ├─lxd-agent.service

● ├─networkd-dispatcher.service

○ ├─open-vm-tools.service

● ├─plymouth-quit-wait.service

● ├─plymouth-quit.service

....

This output is cut off to save space, but you get the idea. Notice we don’t see our hello-world program listed yet. We’re getting there.

Just creating the unit file won’t get systemd to start managing our program. We’ll need to do two more steps. First, we need to get systemd to read our new .service unit file. We can do that with:

systemctl daemon-reload

This causes systemd to reread all the unit files. Now that systemd knows about our unit, we can check the status of our service.

ubuntu@ip-xxxx:/etc/systemd/system$ sudo systemctl status hello-world

○ hello-world.service - Hello World Service

Loaded: loaded (/etc/systemd/system/hello-world.service; enabled; vendor preset: enabled)

Active: inactive (dead)

Disabled vs Inactive

Systemd units have two states that can be set. They sound very similar, but they mean two different things. It’s important to understand the difference.

Enabled/Disabled: TL;DR, can systemd automatically start this unit?

In the second line of output, toward the end of the line, you’ll notice your unit will have a status of either enabled or disabled. This simply indicates whether or not systemd can automatically start the unit. You may have this unit set to start on boot, or it may be wanted by another unit. If your unit is disabled, the links described by its [Install] section are not in place, so systemd won’t start it on boot or pull it in through WantedBy. You can still start it by hand with systemctl start.

Active/Inactive: TL;DR, is the unit running?

When a service unit is inactive, it means that the unit is not currently running or actively providing its services. However, this does not necessarily mean that the unit is permanently disabled. An inactive unit might still be enabled and can be started when needed.
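Both states can also be queried on their own with systemctl is-enabled and systemctl is-active. Here’s a small sketch that prints them side by side; unit_state is a helper name I invented for this example.

```shell
#!/bin/sh
# unit_state UNIT: print the enabled/disabled state and the active/inactive
# state of a unit on one line. Hypothetical helper for illustration.
unit_state() {
    # Both commands print the state word and may exit non-zero
    # (for example when the unit is disabled or inactive), hence "|| true".
    enabled=$(systemctl is-enabled "$1" 2>/dev/null) || true
    active=$(systemctl is-active "$1" 2>/dev/null) || true
    printf '%s: %s / %s\n' "$1" "${enabled:-unknown}" "${active:-unknown}"
}

# Usage:
#   unit_state hello-world.service
```

Seeing the two words next to each other makes it harder to mix them up: the first answers "will systemd start this on its own?", the second answers "is it running right now?".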

Right now, our service is inactive. So let’s start it up with sudo systemctl start hello-world and then check the status again.

ubuntu@ip-xxxx:/etc/systemd/system$ sudo systemctl status hello-world

● hello-world.service - Hello World Service

Loaded: loaded (/etc/systemd/system/hello-world.service; enabled; vendor preset: enabled)

Active: active (running) since Thu 2023-07-20 20:51:37 UTC; 14s ago

Main PID: 179038 (hello-world)

Tasks: 3 (limit: 1141)

Memory: 564.0K

CPU: 3ms

CGroup: /system.slice/hello-world.service

└─179038 /home/ubuntu/hello-world/hello-world

Jul 20 20:51:42 ip-xxxx hello-world[179038]: Hello World

Jul 20 20:51:43 ip-xxxx hello-world[179038]: Hello World

Jul 20 20:51:44 ip-xxxx hello-world[179038]: Hello World

Jul 20 20:51:45 ip-xxxx hello-world[179038]: Hello World

Jul 20 20:51:46 ip-xxxx hello-world[179038]: Hello World

Jul 20 20:51:47 ip-xxxx hello-world[179038]: Hello World

Jul 20 20:51:48 ip-xxxx hello-world[179038]: Hello World

Jul 20 20:51:49 ip-xxxx hello-world[179038]: Hello World

Jul 20 20:51:50 ip-xxxx hello-world[179038]: Hello World

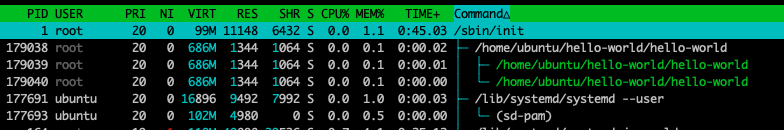

Jul 20 20:51:51 ip-xxxx hello-world[179038]: Hello WorldNotice how the status has switched from inactive (dead) to active (running). Also, notice that below the status info, we get the last ten lines of the log, which is pretty cool. Let’s check htop again and take a look at where our hello-world process shows up on the tree.

We can see here that instead of showing up under bash, where we ran the process from before, it now shows up as a direct child of /sbin/init, which is systemd.

We can also see it if we check the dependencies for the multi-user target again:

ubuntu@ip-xxxx:~$ sudo systemctl list-dependencies multi-user.target

multi-user.target

● ├─apport.service

● ├─chrony.service

● ├─console-setup.service

● ├─cron.service

● ├─dbus.service

○ ├─dmesg.service

○ ├─e2scrub_reap.service

○ ├─ec2-instance-connect.service

○ ├─grub-common.service

○ ├─grub-initrd-fallback.service

● ├─hello-world.service

○ ├─hibinit-agent.service

○ ├─irqbalance.service

....

Hey, check it out, the 11th in the list is our hello-world.service! This is great. Now our service is running in the background. What happens if we kill it?

ubuntu@ip-xxxx:~$ sudo kill 179038

Our unit becomes inactive, as we would expect. We can also see where it was deactivated in the logs.

○ hello-world.service - Hello World Service

Loaded: loaded (/etc/systemd/system/hello-world.service; enabled; vendor preset: enabled)

Active: inactive (dead) since Mon 2023-07-24 19:18:25 UTC; 1min 41s ago

Process: 179038 ExecStart=/home/ubuntu/hello-world/hello-world (code=killed, signal=TERM)

Main PID: 179038 (code=killed, signal=TERM)

CPU: 39.210s

Jul 24 19:18:18 ip-xxxx hello-world[179038]: Hello World

Jul 24 19:18:19 ip-xxxx hello-world[179038]: Hello World

Jul 24 19:18:20 ip-xxxx hello-world[179038]: Hello World

Jul 24 19:18:21 ip-xxxx hello-world[179038]: Hello World

Jul 24 19:18:22 ip-xxxx hello-world[179038]: Hello World

Jul 24 19:18:23 ip-xxxx hello-world[179038]: Hello World

Jul 24 19:18:24 ip-xxxx hello-world[179038]: Hello World

Jul 24 19:18:25 ip-xxxx hello-world[179038]: Hello World

Jul 24 19:18:25 ip-xxxx systemd[1]: hello-world.service: Deactivated successfully.

Jul 24 19:18:25 ip-xxxx systemd[1]: hello-world.service: Consumed 39.210s CPU time.

But what if we want the service to always be running? In that case, we can tell systemd it should restart the service when it dies. All we need to do is update our unit file.

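Two of the most common options for telling systemd when to restart our service are Restart=always and Restart=on-failure. Restart=always makes systemd restart the service whenever its process exits, whether it exited cleanly, exited with an error, or was killed by a signal. The exception is a stop requested through systemd itself, such as systemctl stop, which does not trigger a restart. Restart=on-failure tells systemd to restart the service only when it fails, for example when it exits with a non-zero status; basically, if it crashes.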
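To get a feel for the difference between the two policies, here’s a hand-rolled restart loop in shell. It is only a sketch of the idea, not how systemd does it: supervise is a made-up helper, the restart cap exists just to keep the demo finite, and it only looks at the exit status, whereas systemd also considers things like signals and timeouts.

```shell
#!/bin/sh
# supervise POLICY MAX CMD...: rerun CMD according to POLICY
# ("always" or "on-failure"), giving up after MAX restarts.
# Hypothetical helper; MAX is an assumption added for the demo.
supervise() {
    policy="$1"
    max="$2"
    shift 2
    restarts=0
    while :; do
        # Run the command and remember its exit status
        "$@" && status=0 || status=$?
        # on-failure: a clean exit (status 0) means we are done
        if [ "$policy" = "on-failure" ] && [ "$status" -eq 0 ]; then
            break
        fi
        if [ "$restarts" -ge "$max" ]; then
            break
        fi
        restarts=$((restarts + 1))
        echo "exit status $status, restart counter is at $restarts"
    done
}

# A command that always exits cleanly:
supervise on-failure 2 true   # runs once, no restart
supervise always 2 true       # runs three times (two restarts)
```

With systemd, the whole loop collapses into the single Restart= line in the updated unit file: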

[Unit]

Description=Hello World Service

[Service]

ExecStart=/home/ubuntu/hello-world/hello-world

Restart=always

[Install]

WantedBy=multi-user.target

Now let’s start our service back up.

ubuntu@ip-xxxx:~$ sudo systemctl start hello-world

Warning: The unit file, source configuration file or drop-ins of hello-world.service changed on disk. Run 'systemctl daemon-reload' to reload units.

Seems like systemd detected that our file had changed. That’s cool. We just follow the instructions in the warning and run sudo systemctl daemon-reload to get systemd to reread the unit file.

I’m not totally sure this is necessary in all cases, but I’m also going to restart the service.

ubuntu@ip-xxxx:~$ sudo systemctl restart hello-world

Now, if you check the status again, it should be active. So let’s kill it.

ubuntu@ip-xxxx:~$ sudo kill 190646

And check the status again. It’s still active, but that’s because systemd restarted it faster than we could check the status and see that it was deactivated. No worries, though, because we can see right in the logs when the unit was stopped and when it was started back up again.

ubuntu@ip-xxxx:~$ sudo systemctl status hello-world

● hello-world.service - Hello World Service

Loaded: loaded (/etc/systemd/system/hello-world.service; enabled; vendor preset: enabled)

Active: active (running) since Mon 2023-07-24 19:43:23 UTC; 2s ago

Main PID: 190658 (hello-world)

Tasks: 3 (limit: 1141)

Memory: 572.0K

CPU: 1ms

CGroup: /system.slice/hello-world.service

└─190658 /home/ubuntu/hello-world/hello-world

Jul 24 19:43:23 ip-xxxx systemd[1]: hello-world.service: Scheduled restart job, restart counter is at 1.

Jul 24 19:43:23 ip-xxxx systemd[1]: Stopped Hello World Service.

Jul 24 19:43:23 ip-xxxx hello-world[190658]: Hello World

Jul 24 19:43:23 ip-xxxx systemd[1]: Started Hello World Service.

Jul 24 19:43:24 ip-xxxx hello-world[190658]: Hello World

Jul 24 19:43:25 ip-xxxx hello-world[190658]: Hello World

This only scratches the surface of what we can configure systemd to do in our unit file. We could go on to set resource limits for our service, configure sockets, set file permissions, and more.
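As a taste of the resource-limit side, here’s a sketch of our unit with a few extra directives. The values are assumptions picked purely for illustration, and the file is written to a scratch directory rather than /etc/systemd/system so it is safe to run as-is.

```shell
#!/bin/sh
# Write an example unit file with resource limits into a scratch directory.
# The limits and the User= value are illustrative assumptions, not
# recommendations.
unit_dir=$(mktemp -d)
cat > "$unit_dir/hello-world.service" <<'EOF'
[Unit]
Description=Hello World Service

[Service]
ExecStart=/home/ubuntu/hello-world/hello-world
Restart=always
# Run as an unprivileged user instead of root
User=ubuntu
# Cap memory use for the service's cgroup
MemoryMax=50M
# Allow at most 20% of one CPU
CPUQuota=20%

[Install]
WantedBy=multi-user.target
EOF

cat "$unit_dir/hello-world.service"
```

To try it for real, the same content would go in /etc/systemd/system/hello-world.service, followed by a daemon-reload and a restart of the service.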

Journalctl

We could do a whole other blog post on journalctl, but I do want to mention it here briefly as it can be one of the great advantages of using systemd. When systemd manages a service unit, it automatically captures its standard output and standard error streams and stores them in the journal. This means that any log messages or output generated by the service will be recorded and made accessible through the journalctl command.

We can check logs for hello-world with journalctl -u hello-world. This will give you all the logs, which can be overwhelming to page through. More often, you’ll want logs from a certain time frame when debugging. For that you can use the --since and --until flags.
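The --since flag also accepts relative times such as "1 hour ago", which saves typing out timestamps. Here’s a small sketch of two wrappers I find handy; logs_since and follow_logs are names I made up, while the journalctl flags themselves (-u, --since, --no-pager, -f) are standard.

```shell
#!/bin/sh
# logs_since UNIT WHEN: print a unit's journal entries newer than WHEN,
# without opening a pager. Hypothetical helper.
logs_since() {
    journalctl -u "$1" --since "$2" --no-pager
}

# follow_logs UNIT: stream new entries as they arrive, similar to tail -f.
# Hypothetical helper.
follow_logs() {
    journalctl -u "$1" -f
}

# Usage:
#   logs_since hello-world "1 hour ago"
#   follow_logs hello-world
```

For a fixed window, pass both --since and --until with explicit timestamps: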

journalctl -u hello-world --since "2023-07-25 12:00:00" --until "2023-07-25 18:00:00"

Conclusion

I really had a hard time narrowing down just what to cover in this article. Systemd is a far larger and more complicated subject than I had anticipated. What I’ve tried to do is provide a hands-on introduction to some of systemd’s inner workings and hopefully recreate the lessons and bits of knowledge I learned that really helped me start to get a feel for systemd.

If you’re looking to fall even deeper down the rabbit hole, I’d suggest looking into the following subjects next:

- Check out more about how systemd handles dependencies with .wants directories.

- Reading up on targets in more depth will help you grasp the concept of grouping units and how they can be used to achieve specific system states or goals. Targets are powerful tools for managing the system’s behavior during boot-up or when switching between different system modes.

- Spend some time examining existing unit files for services like SSH or Nginx. Studying these files will provide insights into how experienced administrators configure and manage these critical services using systemd.

What Happens If You Kill Systemd?

Lastly, since I know you were wondering what happens if you run sudo kill -9 1, I can tell you that on an Ubuntu EC2 machine, it does not trigger a kernel panic or cause an explosive fire at Amazon, which is a bummer.

To understand why, we need to remember a couple of things about the kill command. Using kill on a process, by default, will send a SIGTERM. The SIGTERM signal can be caught by that process and used to handle gracefully exiting the program. But we can also send a SIGKILL signal to a process by adding -9, so sudo kill -9 <PID>. The SIGKILL signal cannot be caught by a process, meaning there’s nothing you can do in your program to ignore or gracefully handle a SIGKILL. Your process gets terminated, and too bad for you. This is true of all processes…except for init. The Linux kernel protects PID 1: it only delivers signals that init has installed a handler for, and no process can install a handler for SIGKILL. So actually, sudo kill -9 1 does nothing. The SIGKILL gets lost in the void. You won’t even see anything in the logs, other than the fact that you ran the command. This is great news, since killing systemd would certainly cause a kernel panic, which for an admin, is very very bad. But for those of us with a need to destroy, it’s just very very sad.
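The difference between the two signals is easy to see with an ordinary process. In this sketch a child shell traps SIGTERM and exits on its own terms, while a second child that sends itself SIGKILL never gets the chance to run another line. The variable names are mine, and this only demonstrates regular processes, not PID 1.

```shell
#!/bin/sh
# Child 1: installs a SIGTERM handler, then signals itself.
# The handler runs and the child exits cleanly.
term_result=$(sh -c 'trap "echo caught SIGTERM; exit 0" TERM; kill -TERM $$; echo "not reached"')
echo "SIGTERM demo printed: $term_result"

# Child 2: sends itself SIGKILL. No handler is possible, so the shell dies
# before the echo runs. The "&& ... ||" form just records the exit status.
sh -c 'kill -KILL $$; echo "not reached"' && kill_status=0 || kill_status=$?
echo "SIGKILL demo exit status: $kill_status"
```

An exit status above 128 means the process died from a signal; subtracting 128 gives the signal number, and 9 is SIGKILL.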

So if we can’t send SIGKILL, what about SIGTERM, SIGKILL’s much kinder, catch-able sibling? [2] Well, that we can do. Again, the results aren’t explosive, but they are a bit more interesting.

When I ran sudo kill 1, at first it seemed like nothing happened again. The system kept running, and I even stayed connected via ssh. The only change I noticed was that PID 1 had changed from /sbin/init to /lib/systemd/systemd --system --deserialize 12.

/sbin/init replaced by /lib/systemd/systemd --system --deserialize 12

So I assumed there was some code somewhere that knows to restart systemd immediately whenever systemd receives a SIGTERM. When it restarts, it calls systemd directly rather than accessing it through the symlink. (Or something like that.)

A little more digging and I found this on a few message boards:

“--deserialize is used to restore saved internal state that a previous invocation of systemd, exec()ing this one, has written out to a file. Its option argument is an open file descriptor for that process.”

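So it isn’t really a separate fail-safe restarting systemd. The systemd man page describes this as the intended response to SIGTERM: the system manager serializes its state, re-executes itself, and deserializes the saved state again, which is roughly what systemctl daemon-reexec does. That’s why I didn’t really notice any disturbance. Pretty neat. I guess I’ll need to find something else to break.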
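The save, exec, restore dance from that quote can be imitated with a toy script. This is only a sketch of the general idea: the state here is a single counter, and it is handed over as a file path, whereas the quote says systemd passes an open file descriptor. The point to watch is that the PID stays the same across the exec.

```shell
#!/bin/sh
# Write a toy "re-exec" script to a temporary file, then run it.
demo=$(mktemp)
cat > "$demo" <<'EOF'
#!/bin/sh
# Second invocation: restore the state the old image wrote out
if [ "$1" = "--deserialize" ]; then
    counter=$(cat "$2")
    echo "after: pid=$$ counter=$counter"
    exit 0
fi
# First invocation: save state, then replace this process image
counter=42
state=$(mktemp)
echo "$counter" > "$state"
echo "before: pid=$$ counter=$counter"
exec sh "$0" --deserialize "$state"
EOF

reexec_output=$(sh "$demo")
echo "$reexec_output"
```

Because exec replaces the program without creating a new process, both lines should report the same PID, much like PID 1 stayed PID 1 while its command line changed.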


[1] It’s important to mention right away that there was a lot of controversy about the switch to systemd and that I do not care.

[2] SIGling?